ChatGPT like Interface with Open WebUI and Ollama

Posted in Recipe on December 25, 2023 by Venkatesh S ‐ 4 min read


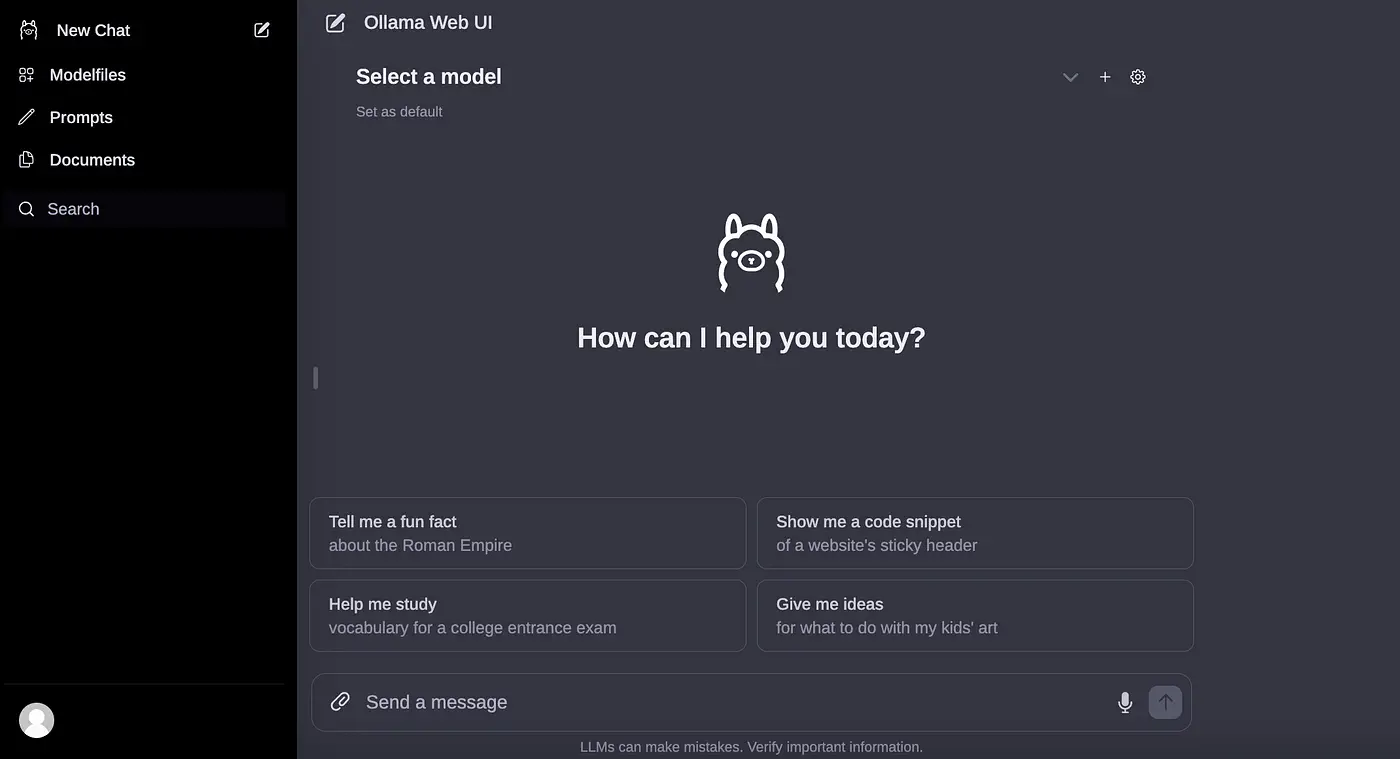

I have been experimenting with setting up a local LLM and building various services around it. While looking at UI options similar to ChatGPT, I found an excellent open-source project called Open WebUI.

In today’s article, we will set it up and point it at a locally running LLM. I have used Ollama for this example, so a working Ollama installation is a prerequisite. If you want to know how to set up Ollama and run it locally, refer to this article on Ollama: Get up and running with Large Language Models locally

You might be wondering why you would do this. If you want a private setup with a local model and a ChatGPT-style interface, try this out.

What is Open WebUI?

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs.

Features of Open WebUI

Some of the key features I found useful are listed below.

🖥️ Intuitive Interface: The chat interface takes inspiration from ChatGPT, ensuring a user-friendly experience.

📱 Responsive Design: Enjoy a seamless experience on both desktop and mobile devices.

⚡ Swift Responsiveness: Enjoy fast and responsive performance.

🚀 Effortless Setup: Install seamlessly using Docker or Kubernetes (kubectl, kustomize or helm) for a hassle-free experience.

💻 Code Syntax Highlighting: Enjoy enhanced code readability with our syntax highlighting feature.

📚 Local RAG Integration: Dive into the future of chat interactions with the groundbreaking Retrieval Augmented Generation (RAG) support. This feature seamlessly integrates document interactions into your chat experience. You can load documents directly into the chat or add files to your document library, effortlessly accessing them using the # command in the prompt.

🌐 Web Browsing Capability: Seamlessly integrate websites into your chat experience using the # command followed by the URL. This feature allows you to incorporate web content directly into your conversations, enhancing the richness and depth of your interactions.

🏷️ Conversation Tagging: Effortlessly categorize and locate specific chats for quick reference and streamlined data collection.

📥🗑️ Download/Delete Models: Easily download or remove models directly from the web UI.

🤖 Multiple Model Support: Seamlessly switch between different chat models for diverse interactions.

🔄 Multi-Modal Support: Seamlessly engage with models that support multimodal interactions, including images (e.g., LLaVA).

💬 Collaborative Chat: Harness the collective intelligence of multiple models by seamlessly orchestrating group conversations. Use the @ command to specify the model, enabling dynamic and diverse dialogues within your chat interface. Immerse yourself in the collective intelligence woven into your chat environment.

📜 Chat History: Effortlessly access and manage your conversation history.

📤📥 Import/Export Chat History: Seamlessly move your chat data in and out of the platform.

🗣️ Voice Input Support: Engage with your model through voice interactions; enjoy the convenience of talking to your model directly. Additionally, explore the option for sending voice input automatically after 3 seconds of silence for a streamlined experience.

⚙️ Fine-Tuned Control with Advanced Parameters: Gain a deeper level of control by adjusting parameters such as temperature and defining your system prompts to tailor the conversation to your specific preferences and needs.

🎨🤖 Image Generation Integration: Seamlessly incorporate image generation capabilities using the AUTOMATIC1111 API (local) and DALL-E, enriching your chat experience with dynamic visual content.

🤝 OpenAI API Integration: Effortlessly integrate an OpenAI-compatible API for versatile conversations alongside Ollama models. Customize the API Base URL to link with LMStudio, Mistral, OpenRouter, and more.

✨ Multiple OpenAI-Compatible API Support: Seamlessly integrate and customize various OpenAI-compatible APIs, enhancing the versatility of your chat interactions.

🔗 External Ollama Server Connection: Seamlessly link to an external Ollama server hosted on a different address by configuring the environment variable.

👥 Multi-User Management: Easily oversee and administer users via our intuitive admin panel, streamlining user management processes.

🔐 Role-Based Access Control (RBAC): Ensure secure access with restricted permissions; only authorized individuals can access your Ollama, and exclusive model creation/pulling rights are reserved for administrators.
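
The external Ollama server connection in the list above is configured through an environment variable passed to the container. Here is a minimal sketch of what that could look like. It assumes the variable is named `OLLAMA_BASE_URL`, which is what recent Open WebUI images read as far as I know (older releases may use a different name, so check the project README for your version). The helper name `webui_run_command` and the address `192.168.1.10` are hypothetical, and the remaining flags are the same ones used in the installation command later in this article.

```shell
# Hypothetical helper: print a docker run command that points Open WebUI
# at an Ollama server running on another address.
# Assumption: the image reads the OLLAMA_BASE_URL environment variable.
# $1 = base URL of the Ollama server, e.g. http://192.168.1.10:11434
webui_run_command() {
    printf 'docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=%s -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main\n' "$1"
}
```

Calling `webui_run_command http://192.168.1.10:11434` only prints the command, so you can review it before running it yourself.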

Installation using Docker

Please note that the following instructions assume that the latest version of Ollama is already installed and running on your machine. If not, please refer to Ollama: Get up and running with Large Language Models locally

Run the following command:
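
Before starting the container, it can help to confirm that Ollama is actually reachable. The sketch below assumes Ollama is listening on its default address, `http://localhost:11434`, and uses its `/api/tags` endpoint, which lists the locally available models. The variable `OLLAMA_URL` and both function names are my own, not part of Ollama or Open WebUI.

```shell
# Assumption: Ollama runs on its default address unless OLLAMA_URL is set.
# OLLAMA_URL is a hypothetical variable used only by this sketch.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Build the URL of Ollama's "list local models" endpoint.
# $1 (optional) = base URL to use instead of OLLAMA_URL
ollama_tags_url() {
    printf '%s/api/tags\n' "${1:-$OLLAMA_URL}"
}

# Return 0 if Ollama answers on that endpoint, non-zero otherwise.
# -f fails on HTTP errors, -sS stays quiet but still prints errors.
check_ollama() {
    curl -fsS --max-time 5 "$(ollama_tags_url "${1:-}")" >/dev/null
}
```

For example, `check_ollama && echo "Ollama is up"` prints the message only when the server responds.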

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

The `-p 3000:8080` flag publishes the container's port 8080 on port 3000 of your machine, so if you now access the URL http://localhost:3000 you should be able to view the UI as shown above.
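
If you change the port mapping, the URL changes with it: in `-p HOST:CONTAINER`, the browser uses the HOST part. A small sketch of that rule is below. The function names are hypothetical, and `webui_url` assumes the simple two-part `HOST:CONTAINER` form rather than a mapping that also includes an IP address.

```shell
# Derive the browser URL from a Docker "-p HOST:CONTAINER" port mapping.
# $1 = mapping in two-part form, e.g. 3000:8080
webui_url() {
    mapping="$1"
    host_port="${mapping%%:*}"   # text before the first colon, e.g. 3000
    printf 'http://localhost:%s\n' "$host_port"
}

# Ask Docker where container port 8080 is published on the host.
# Requires the open-webui container from the command above to be running.
show_published_port() {
    docker port open-webui 8080
}
```

With the command used in this article, `webui_url 3000:8080` gives the address to open, and `show_published_port` lets you confirm the mapping on a running container.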