BentoML : A faster way to ship your models to production

Posted in Recipe on February 14, 2023 by Venkatesh S ‐ 6 min read

Typical ML Model Build Deployment Process

I have tried building and deploying ML models in production in some of my customer engagements. The typical lifecycle I follow is as follows.

Using pybuilder to create a new project

Building and Training the model using sklearn

Store model into a persistant store (disk) using pickle or joblib

Build an API layer to access the model (predict)

Load the model, return results

Further, Package the model as pip installable file (wheel file) for resuability

Create docker containers and deploy the model

If you see this, you can notice that the data science teams needs to have expertise in many other aspects of software development and deployment and not just model building.

BentoML Build Deployment Process

Imagine a framework that enables you to achieve everything mentioned above, but abstracts out the whole process of development and deployment. Let’s welcome BentoML

The whole process of building and deploying the machine learning models using BentoML is as follows.

Saving the models with BentoML

Exposing the models as API services

Calling API Services

Loading and Using Models in Services

Production build and deployment of the models

Containerize the Services and deploy

Trying out an example

You can find the Source Code here for all the examples.

Setting up the project

Install all the dependencies using the following commands

pip install bentoml scikit-learn pandas

Saving Models with BentoML

To begin with BentoML, you will need to save your trained models with BentoML API in its model store (a local directory managed by BentoML). The model store is used for managing all your trained models locally as well as accessing them for serving.

import bentoml

from sklearn import svm

from sklearn import datasets

# Load training data set

iris = datasets.load_iris()

X, y = iris.data, iris.target

# Train the model

clf = svm.SVC(gamma='scale')

clf.fit(X, y)

# Save model to the BentoML local model store

saved_model = bentoml.sklearn.save_model("iris_clf", clf)

print(f"Model saved: {saved_model}")

# Model saved: Model(tag="iris_clf:zy3dfgxzqkjrlgxi")

The model is now saved under the name iris_clf with an automatically generated version. The name and version pair can then be used for retrieving the model. For instance, the original model object can be loaded back into memory for testing via:

# load a specific version

model = bentoml.sklearn.load_model("iris_clf:2uo5fkgxj27exuqj")

# Alternatively, use `latest` to find the newest version

model = bentoml.sklearn.load_model("iris_clf:latest")

The bentoml.sklearn.save_model API is built specifically for the Scikit-Learn framework and uses its native saved model format under the hood for best compatibility and performance. Also note that the this can be used for other ML frameworks like PyTorch.

Exposing the models as API services

Services are the core components of BentoML, where the serving logic is defined. Create a service file with the below contents.

import numpy as np

import bentoml

from bentoml.io import NumpyNdarray

iris_clf_runner = bentoml.sklearn.get("iris_clf:latest").to_runner()

svc = bentoml.Service("iris_classifier", runners=[iris_clf_runner])

@svc.api(input=NumpyNdarray(), output=NumpyNdarray())

def classify(input_series: np.ndarray) -> np.ndarray:

result = iris_clf_runner.predict.run(input_series)

return result

We can now run the BentoML server for the new service in development mode using the following command

bentoml serve service:svc --reload

Now your model is readily being served using an HTTP based API service.

Note that you will be able to deploy your application as gRPC service too. You can use the below command to do the same.

bentoml serve-grpc service:svc --reload --enable-reflection

Calling API Services

Let’s try to call the API using the following command using CURL.

curl -X POST \

-H "content-type: application/json" \

--data "[[5.9, 3, 5.1, 1.8]]" \

http://127.0.0.1:3000/classify

Note that BentoML also provides an UI using which you can send the requests. The UI is accessible at http://127.0.0.1:3000

Loading and Using Models in Services

In case you want to use the above as a service call, bentoml.sklearn.get creates a reference to the saved model in the model store, and to_runner creates a Runner instance from the model. The Runner abstraction gives BentoServer more flexibility in terms of how to schedule the inference computation, how to dynamically batch inference calls and better take advantage of all hardware resource available.

import bentoml

iris_clf_runner = bentoml.sklearn.get("iris_clf:latest").to_runner()

iris_clf_runner.init_local()

iris_clf_runner.predict.run([[5.9, 3., 5.1, 1.8]])

Production build and deployment of the models

Now lets build the model to deploy in production.

Bento is the distribution format for a service. It is a self-contained archive that contains all the source code, model files and dependency specifications required to run the service.

To build a Bento, first create a bentofile.yaml file in your project directory. The content of the file is as follows

service: "service:svc" # Same as the argument passed to `bentoml serve`

labels:

owner: bentoml-team

stage: dev

include:

- "*.py" # A pattern for matching which files to include in the bento

python:

packages: # Additional pip packages required by the service

- scikit-learn

- pandas

Next, run the below command from the same directory

bentoml build

You’ve just created your first Bento, and it is now ready for serving in production! For starters, you can now serve it with the below command.

bentoml serve iris_classifier:latest --production

Bento is the unit of deployment in BentoML, one of the most important artifacts to keep track of in your model deployment workflow.

Containerize the Services

A docker image can be automatically generated from a Bento for production deployment using just a single command.

bentoml containerize iris_classifier:latest

Note that the docker image tag will be same as the Bento tag by default.

You can now run the docker image to start the bento server using the following command. Replace the tag with the generated version of bento.

docker run -it --rm -p 3000:3000 iris_classifier:tag serve --production

This will bring up the server on port 3000 and is accessible now at http://127.0.0.1:3000

Most of the deployment tools built on top of BentoML use Docker under the hood. It is recommended to test out serving from a containerized Bento docker image first, before moving to a production deployment. This helps verify the correctness of all the docker and dependency configs specified in the bentofile.yaml.

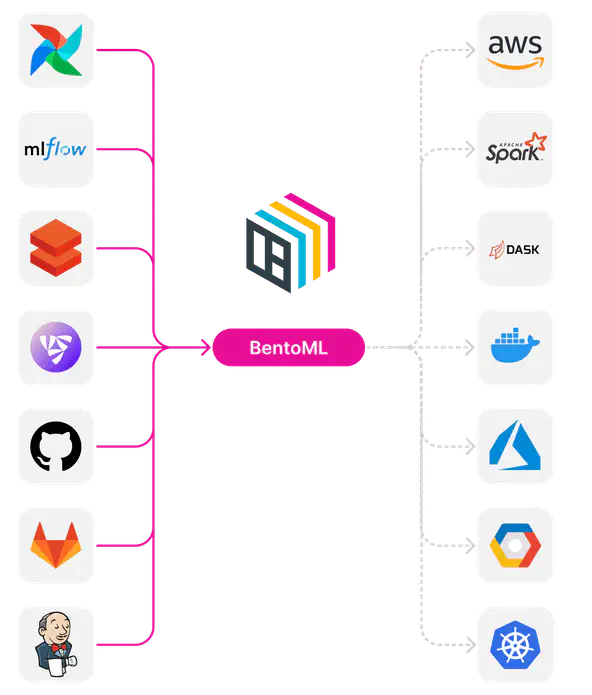

Deploying Bentos

BentoML standardizes the saved model format, service API definition and the Bento build process, which opens up many different deployment options for ML teams.

The Bento we built and the docker image created in the previous steps are designed to be DevOps friendly and ready for deployment in a production environment. If your team has existing infrastructure for running docker, it’s likely that the Bento generated docker images can be directly deployed to your infrastructure without any modification.